Quantum mechanics

If you think you understand quantum mechanics, you don't understand quantum mechanics.

Richard Feynman

Quantum mechanics is a scientific theory that explains how our world works at the micro level. It was born at the beginning of the 20th century and is, by definition, the antipode of the theory of relativity. It consists of math, cognitive dissonance, holy wars and Schrodinger's cats, and for that reason is often called the “Picasso of physics”.

QM is related to processes like superconductivity and superfluidity, whose practical realization will, in the nearest future, allow building blasters, teleportation, anti-gravity and other cool shit we saw in our favorite sci-fi movies.

History

Enter black body

Europe was just wrapping up the Renaissance and physics was only forming as a separate science. The first physicists worked out mechanics with its levers, cogs and celestial bodies. The next item on the agenda was molecules, and luckily for the scientists almost all thermal processes turned out to be simply mechanical. It was a very epic win. The energy of the mechanical movement of molecules allowed for steampunk engines, Boyles with Mariottes and Carnot cycles. This happy state of affairs was called classical physics. It seemed like it would last forever and that everything had already been discovered.

Nobody saw the trouble coming.

It would seem that, knowing so much about energy and molecules, it would be really easy to explain why a thingamajig heated to 1000 degrees glows red and to 9000 – blue. But no, this simple question caused a lot of brain damage among 19th century physicists. It produced a paradox: classical physics crumbled trying to calculate the total energy of electromagnetic radiation inside a closed cavity (black body). The calculations showed that if the System isn’t lying, then the total radiation energy of any black body must be infinitely large, hinting that things aren’t as simple as they thought. There were two laws at the time that physicists used to explain what was happening: the Rayleigh–Jeans law (which agreed with experiment at low frequencies, but shot off to infinity as the frequency increased) and the Wien approximation, which failed the opposite way: fine at high frequencies, wrong at low ones. The problem was given the ominous name “ultraviolet catastrophe”.

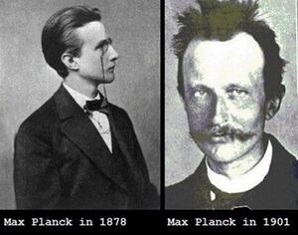

The first man to come up with a fix was Max Planck. He combined the aforementioned equations into one and suggested that the energy of an electromagnetic wave can be radiated or absorbed only in whole portions, although he didn’t bother to explain how or why this happens. Indeed, if the cavity of a black body is enclosed and in thermal equilibrium, then there can only be standing waves inside it. For that to happen, their nodes must lie on the walls of the body, and therefore each wave must consist of an integer number of half-waves. The confusing part was that, according to Planck, the energy of each such wave had to be a whole multiple of some minimal portion, and that minimal portion was proportional to the wave’s frequency. Planck was the first whose brain became a victim of quantum mechanics, and until his death he refused to believe in this heresy. Nevertheless, his equation worked with stunning accuracy, and he was awarded the 1918 Nobel Prize for this discovery. Interestingly, Planck’s theory didn’t arouse much interest among his colleagues at first; it only started getting attention a few years later, when the problem of the photoelectric effect emerged.
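To see the catastrophe in numbers, here is a minimal sketch comparing the three laws mentioned above. The formulas are the standard textbook forms for spectral radiance per unit frequency; the function names and the 5000 K temperature are illustrative choices, not anything from the original experiments.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def rayleigh_jeans(nu, temp):
    """Classical law: grows as nu^2 forever, hence the 'catastrophe'."""
    return 2.0 * nu**2 * K_B * temp / C**2


def wien(nu, temp):
    """Wien approximation: fine at high frequency, off at low frequency."""
    return 2.0 * H * nu**3 / C**2 * math.exp(-H * nu / (K_B * temp))


def planck(nu, temp):
    """Planck's law: energy is exchanged in portions of h*nu."""
    return 2.0 * H * nu**3 / C**2 / math.expm1(H * nu / (K_B * temp))


if __name__ == "__main__":
    temp = 5000.0  # assumed temperature, K
    for nu in (1e11, 1e13, 1e15):
        print(f"{nu:.0e} Hz  RJ={rayleigh_jeans(nu, temp):.3e}  "
              f"Wien={wien(nu, temp):.3e}  Planck={planck(nu, temp):.3e}")
```

At low frequency Planck's curve hugs Rayleigh–Jeans, at high frequency it hugs Wien, and only Rayleigh–Jeans keeps climbing.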

Planck really pissed off everybody with his discovery, including himself. Maxwell didn’t have any portions in his theory, and nobody could understand why the energy of a wave should be discrete, except that it worked. Unraveling the mysterious physical meaning of Planck's constant took many decades.

Photoelectric effect

Meanwhile in (not yet Soviet) Russia Aleksandr Stoletov was studying the photoelectric effect – the emission (external) or redistribution among energy states (internal) of electrons caused by electromagnetic radiation (light), accompanied by an increase in the material’s conductivity. As classical physics would suggest, the photoelectric current is proportional to the intensity of the light (wave amplitude). BUT! Stoletov figured out that he could switch the battery polarity in his setup, and noticed that if you slowly raise the voltage, the photocurrent stops at a certain voltage. What’s important is that this voltage depends on the light’s wavelength and the electrode’s material and DOESN’T depend on the light’s intensity. Also, if the electrode was irradiated by very red and infrared (long-wavelength) light, the photoelectric effect didn’t take place at all, and again to hell with intensity. That finding sent Stoletov into a stupor, since according to classical physics the important parameter was amplitude-intensity, not frequency. Also, it should have taken time for a wave to loosen and knock out an electron, but in the experiment the electrons jumped out instantly.

The uncomfortable question hung in the air for five years.

In 1905 Einstein finally found a solution to the problem, for which he received his Nobel trophy in 1921 (and not for the theory of relativity, as many think). While studying the photoelectric effect he decided to apply Planck’s discrete wave energy model to light. He suggested that light is a stream of microscopic particles (photons) and that the energy of each photon is proportional to the light wave’s frequency. So Einstein showed that Planck’s hypothesis about energy discreteness reflects a fundamental property of electromagnetic waves: they consist of particles – photons, which are small portions, or quanta, of light.
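Einstein's bookkeeping can be sketched in a few lines: each photon brings energy h·c/λ, part of it pays the electron's exit fee (the work function), and the stopping voltage eats the rest. The 2.3 eV work function below is an assumed illustrative value, and the function names are made up for this sketch.

```python
H = 6.62607015e-34          # Planck constant, J*s
C = 2.99792458e8            # speed of light, m/s
E_CHARGE = 1.602176634e-19  # elementary charge, C


def photon_energy_ev(wavelength_nm):
    """Energy of one photon in electronvolts."""
    return H * C / (wavelength_nm * 1e-9) / E_CHARGE


def stopping_voltage(wavelength_nm, work_function_ev):
    """Stopping voltage in volts, or None below the red limit.

    Note that light intensity is not a parameter at all: it only
    changes how many electrons come out, not how energetic they are.
    """
    excess = photon_energy_ev(wavelength_nm) - work_function_ev
    return excess if excess > 0 else None


if __name__ == "__main__":
    work_function = 2.3  # eV, assumed value for illustration
    for wavelength in (400, 500, 700):
        print(wavelength, "nm ->", stopping_voltage(wavelength, work_function))
```

Violet light at 400 nm gives a stopping voltage of roughly 0.8 V for this work function, while red light at 700 nm knocks out nothing, however bright it is.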

And suddenly everything became much worse than before.

“Wave vs particle” holy war

The idea of viewing light as a stream of particles was first offered by Newton. He was opposed by Dutch physicist Christiaan Huygens, who argued that light is a wave. At the beginning of the 19th century, experiments by limey physicist Thomas Young proved that Newton was wrong and light is a wave.

Then Einstein came with his photoeffect and photons-quanta. Physicists harmoniously facepalmed (as they always did when Einstein showed up). And were right to do so, as experiments actually revealed that something weird was going on. In the most shocking scenario, that to this day rattles first-year physics students, a single set of instruments shows the world consisting of small balls-particles, and the same set but arranged in a different manner shows the world made of electromagnetic waves. Any person not suffering from advanced psychosis would likely assume that X (whatever it is) should “be” either a particle or a wave, and can’t “be” both a wave and a particle depending on how we look at this X.

In 1923 young French aristocrat Louis de Broglie suggested that wave–particle duality is inherent to all microparticles, not just photons. It suddenly turned out that anything with mass must have a wave incarnation too, and therefore the matter surrounding us is both waves and particles at the same time, and whoever doesn’t like it can kill himself. The scientists continued clucking “what the fuck?”, only this time mostly to themselves, and the slyest started acting like they understood everything.

The fact of the matter is both waves and particles are simply approximations, an attempt to mathematically explain the surrounding world. In reality everything is much more complicated and nobody can explain exactly what surrounds us, except you know who. And so, my little bipedal friend, to save yourself from brain injuries, consider this:

- While light (any EM radiation) travels through space it behaves like a wave – is subject to interference, diffraction, polarization. Photon-photon interaction doesn’t exist (actually it does, but only in high-energy fields) and Maxwell’s theory is linear.

- But when light interacts with matter, is being emitted or absorbed – it has to be considered a stream of particles and quantum effects begin to show their trollface. Simplest occurrence – scattering of photons by electrons (Compton effect).

Subj

Another problem arose in 1911, when racially British physicist Rutherford discovered that an atom is nothing like Thomson’s blueberry muffin, as had been thought before, and is actually almost empty. The atom consists of a small nucleus with electrons spinning around it like crazy at a large (relative to the size of the nucleus) distance (the planetary model). The discovery was so earth-shattering that he kept his mouth shut for two years, while taking over 9000 measurements, then re-measurements, then checking the re-measurements, then re-checking the checkings. The thing is, according to electrodynamics an electron orbiting a nucleus must radiate (any movement along a closed trajectory is by definition accelerated, which adds radiating terms to Maxwell’s equations) and as a result lose energy while spiraling into the nucleus. This means that our world doesn't have the right to exist, and right now, in about ten picoseconds, must come to an atrocious violent gruesome petrifying end. But then Niels Bohr came – Rutherford’s student by the way – and said, let’s quit fucking around and assume there’s something we don’t know yet, and Armageddon was postponed until further notice.
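How fast would the classical atom actually die? A rough sketch, assuming a non-relativistic electron on a slowly shrinking circular orbit that radiates according to the Larmor formula; under those assumptions the textbook estimate for the fall time is t = a0³ / (4·r_e²·c). The formula and constants are standard, but they are brought in here for illustration and are not part of the original text.

```python
A0 = 5.29177210903e-11   # Bohr radius, m (typical starting orbit)
R_E = 2.8179403262e-15   # classical electron radius, m
C = 2.99792458e8         # speed of light, m/s


def classical_collapse_time(r0=A0):
    """Time for a classically radiating electron to spiral from r0 to the centre."""
    return r0**3 / (4.0 * R_E**2 * C)


if __name__ == "__main__":
    print(f"classical atom lifetime: {classical_collapse_time():.2e} s")
```

The answer lands in the tens of picoseconds, which is not a lot of time to enjoy the universe.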

To explain the structure of the atom, Bohr suggested the existence of stable orbits, on which the electron doesn’t radiate and is only allowed to possess certain discrete amounts of energy. This approach, further developed by Arnold Sommerfeld and a few others, is sometimes referred to as the old quantum theory (1900 – 1924). In 1925-1926 the foundation for a consistent quantum theory was laid: quantum mechanics, with its new fundamental laws of kinematics and dynamics.
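What "certain discrete amounts of energy" buys you can be sketched for hydrogen. In Bohr's model the allowed energies are E_n = −13.6 eV / n², and a jump between two levels emits a photon carrying the difference. The constants are standard values (infinite nuclear mass assumed, so the last digits differ slightly from the measured lines); the function names are invented for this example.

```python
RYDBERG_EV = 13.605693   # hydrogen ground-state binding energy, eV
HC_EV_NM = 1239.841984   # h*c expressed in eV*nm


def level_energy_ev(n):
    """Energy of the n-th allowed orbit (negative: the electron is bound)."""
    return -RYDBERG_EV / n**2


def transition_wavelength_nm(n_upper, n_lower):
    """Wavelength of the photon emitted when the electron drops n_upper -> n_lower."""
    return HC_EV_NM / (level_energy_ev(n_upper) - level_energy_ev(n_lower))


if __name__ == "__main__":
    for n in (3, 4, 5):
        print(f"{n} -> 2: {transition_wavelength_nm(n, 2):.1f} nm")
```

The 3 → 2 jump comes out near 656 nm, the red line of the hydrogen spectrum, which is the kind of agreement that made people take the stable orbits seriously.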

Spherical QM in a vacuum

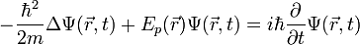

The Schrodinger equation makes this article look smarter than it actually is. Paradoxically, Schrodinger, who basically invented QM, considered both Bohr’s idea of “sudden quantum leaps obeying probability laws” and Heisenberg’s “q-numbers” gibberish. He was trying to give the idea of matter waves a solid objective basis.

Schrodinger almost smashed himself against the wall trying to give birth to the equation. He was interested in Hamilton's principle of least action: if you launch a ball on a curved tilted surface, it will roll along the trajectory of least action (the integral of the Lagrangian along the trajectory). The same is true for a light wave propagating through an inhomogeneous optical medium, and for electric current.

Schrodinger noted that the equation describes specifically this principle of wave propagation. Initially this idea was named “wave mechanics”, and later, as a result of crossbreeding with Bohr’s and Planck’s quantum theory and Heisenberg’s “matrix mechanics”, it produced in 1925-1926 the modern nonrelativistic concept of “quantum mechanics”.

Uncertainty principle

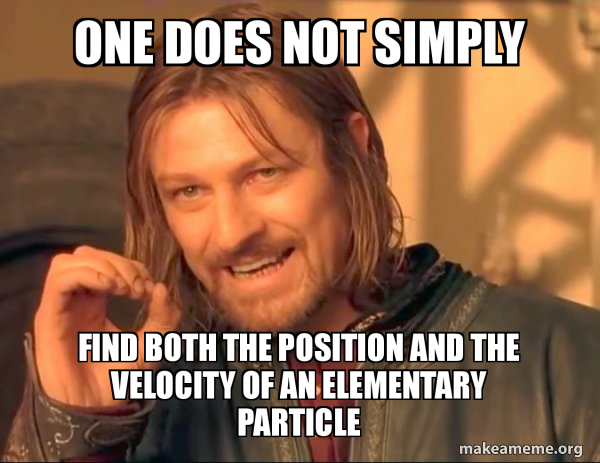

Heisenberg's uncertainty principle plays a key role in QM, because it shows exactly how the microworld differs from our familiar material world.

When we measure the position and speed of an object in the normal world, we don’t noticeably affect it. So ideally we can establish its exact speed and coordinates (in other words, with zero uncertainty). In the quantum world, however, every measurement affects the system (since the measurement itself uses quanta interacting with the measured particle). The very act of measuring the location of a particle causes its speed to change unpredictably, and vice versa.

Actually if we managed to measure with absolute certainty one of the parameters, the uncertainty of the other one would become infinite, and we would know literally nothing about it. In other words, if we absolutely precisely measured the coordinates of a particle we would know nothing of its speed, and if we precisely measured its speed, we would have no idea where it is.
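A quick back-of-the-envelope sketch shows why nobody noticed this before the 20th century. It assumes the standard form of the principle, Δx·Δp ≥ ħ/2, and plugs in an electron pinned down to the size of an atom versus a one-gram ball located to within a micrometre; both scenarios are illustrative choices.

```python
HBAR = 1.054571817e-34      # reduced Planck constant, J*s
M_ELECTRON = 9.1093837015e-31  # electron mass, kg


def min_velocity_spread(mass_kg, delta_x_m):
    """Smallest velocity uncertainty allowed by delta_x * delta_p >= hbar / 2."""
    return HBAR / (2.0 * mass_kg * delta_x_m)


if __name__ == "__main__":
    # Electron localised to about one atomic diameter.
    print(f"electron: {min_velocity_spread(M_ELECTRON, 1e-10):.2e} m/s")
    # One-gram ball localised to one micrometre.
    print(f"ball:     {min_velocity_spread(1e-3, 1e-6):.2e} m/s")
```

The electron's speed is smeared by hundreds of kilometres per second, while the ball's is smeared by an amount that no instrument will ever see.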

Some might say, what’s the big deal? Nevertheless, the uncertainty principle leads to some very peculiar theories (see below).

Wave–particle duality

“Wave vs particle” holy war has been going on for 400 years. It began with Newton’s corpuscular theory. Then Huygens showed that light acts as a wave, but due to Newton’s authority the posse continued to consider light as particles for another hundred years until Young conducted his double-slit experiment in the beginning of the 19th century while Fresnel refined Huygens’s wave theory. In the original experiment light passes through two slits and falls on a screen, producing alternating bright and dark bands (interference fringes). It can be explained by waves either adding or canceling each other. “Oh, well” – said the posse and started considering light a wave in ether. Until Planck – almost another century later – came up with his quanta to avoid ultraviolet catastrophe, and Einstein used them to explain abnormal photoeffect. Also, de Broglie in his moment of clarity declared that not only light is both a wave and a particle, but everything else as well, for example, an electron. And to prove his point calculated its wavelength.

In the 1920s Davisson and Germer dared to shoot a bunch of electrons into a crystal (the electron’s wavelength turned out to be so small that the only thing that could act as a diffraction grating was the crystal's periodic structure). It wasn’t a big surprise that they saw a diffraction pattern. Proponents of QM continued knitting math for it, and the opponents helped them: besides Einstein there was Herr Schrodinger, with his equation and with a cat to troll the adversaries. The equation was greatly appreciated, the cat – not so much (people weren’t so stoked on cats in preyoutubic times).
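Why a crystal and not a regular grating? A small sketch using de Broglie's relation λ = h/p for a slow (non-relativistic) electron. The 54 eV energy and the 0.215 nm nickel spacing are the values commonly quoted for the Davisson–Germer experiment; they are brought in here as outside assumptions, not taken from the text above.

```python
import math

H = 6.62607015e-34             # Planck constant, J*s
M_ELECTRON = 9.1093837015e-31  # electron mass, kg
E_CHARGE = 1.602176634e-19     # elementary charge, C

NICKEL_SPACING_NM = 0.215      # commonly quoted atomic spacing of nickel (assumption)


def electron_wavelength_nm(kinetic_energy_ev):
    """Non-relativistic de Broglie wavelength of an electron, in nanometres."""
    momentum = math.sqrt(2.0 * M_ELECTRON * kinetic_energy_ev * E_CHARGE)
    return H / momentum * 1e9


if __name__ == "__main__":
    wavelength = electron_wavelength_nm(54.0)
    print(f"wavelength: {wavelength:.3f} nm, "
          f"crystal spacing: {NICKEL_SPACING_NM} nm")
```

The wavelength comes out at a fraction of a nanometre, the same scale as the distance between atoms, so a crystal lattice is about the only grating fine enough.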

The result was this. Imagine Young’s original experiment with two slits. “What happens if we put detectors next to both slits and try to catch a quantum when it goes through?” According to QM, the quantum will always be caught coming out of one slit, and never both (since it is by definition indivisible). And yes, the interference fringes disappear, replaced by a plain fringe-free pattern. But that’s not all – are you watching closely? What if we install only one detector next to one slit? Even if a quantum wasn't caught by the detector (went through the other slit), the interference fringes still disappear (it means that the quantum “figured out” that it’s being watched at the other slit and refused to interfere with its rat counterpart, cunningly acting like a particle instead of a wave).
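The difference between the two cases fits in one line of arithmetic: without detectors the amplitudes of the two paths are added and then squared, with detectors the probabilities are added. A minimal sketch, assuming idealised point-like slits (so no single-slit envelope) and made-up dimensions for the setup:

```python
import cmath
import math


def amplitude(x, slit_pos, wavelength, screen_dist):
    """Unit-modulus amplitude for the path from one slit to point x on the screen."""
    path = math.hypot(screen_dist, x - slit_pos)
    return cmath.exp(2j * math.pi * path / wavelength)


def intensity(x, wavelength=500e-9, slit_sep=50e-6, screen_dist=1.0,
              which_path=False):
    """Relative intensity at screen position x (all lengths in metres)."""
    a1 = amplitude(x, +slit_sep / 2, wavelength, screen_dist)
    a2 = amplitude(x, -slit_sep / 2, wavelength, screen_dist)
    if which_path:
        # Path is known: probabilities add, the cross term is gone.
        return abs(a1) ** 2 + abs(a2) ** 2
    # Path is unknown: amplitudes add, the cross term makes fringes.
    return abs(a1 + a2) ** 2


if __name__ == "__main__":
    for x_mm in (0.0, 2.5, 5.0, 7.5, 10.0):
        x = x_mm * 1e-3
        print(f"x={x_mm:4.1f} mm  no detector: {intensity(x):.3f}  "
              f"detector: {intensity(x, which_path=True):.3f}")
```

With these numbers the fringe spacing is 1 cm: the undetected case swings between 4 and 0, while the detected case sits flat at 2 everywhere.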

And as a final nail in the coffin of common sense: if we take the measurement after a quantum passes the slits but before it hits the screen, the sneaky quantum again starts acting like a particle. It behaves as if it went back in time (sic!) and passed through only one slit instead of both, as if it had never been a wave at all (for more details google the delayed-choice experiment).

Of course, it was possible that in Davisson’s experiment the electrons were interacting with each other in some tricky way that produces something that looks like a wave. In 1949 comrade Fabrikant figured out how to shoot electrons through a crystal one at a time. A single electron passed through the crystal and landed somewhere on a screen. Then another one, and another one. And, as if with a wave of a magic wand, the diffraction pattern appeared on the screen. But nobody gave a shit at this point, since light interference had already been observed even with not particularly monochromatic light, where each photon has a slightly different wavelength and phase. Which means that interference was a result of photons interacting with themselves, not their neighbors. That’s why Davisson got a Nobel prize and Fabrikant – a bag of dicks.

It is important to remember that the bigger the system, the more it is subject to external influences. In large complex systems consisting of many billions of atoms, decoherence (the reduction of a superposition to a mixture of states) happens almost instantaneously, and therefore the infamous Schrodinger’s cat can’t be both dead and alive over any measurable time interval.

Probabilistic nature of predictions

The fundamental difference of quantum mechanics from classical is that its predictions are always probabilistic. It means that we can never predict with absolute certainty where, for example, the electron will hit in the previous experiment, no matter how sophisticated and precise our detectors are. We can only calculate the chances of hitting a certain spot on a screen utilizing terms and methods of probability theory, which is designed to analyze uncertain situations.
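What "we can only calculate the chances" looks like in practice can be sketched with a toy simulation: each particle lands at one random spot, and the fringes only show up in the statistics. The cos² probability profile is an idealised stand-in for a real two-slit pattern, and all names and numbers below are illustrative.

```python
import math
import random


def fringe_probability(x, fringe_spacing=1.0):
    """Relative probability of a hit at x: idealised cos^2 fringes."""
    return math.cos(math.pi * x / fringe_spacing) ** 2


def sample_hits(n, rng, half_width=2.0):
    """Draw n single-particle hits, one at a time, by rejection sampling."""
    hits = []
    while len(hits) < n:
        x = rng.uniform(-half_width, half_width)
        if rng.random() < fringe_probability(x):
            hits.append(x)
    return hits


def count_near(hits, centre, radius=0.1):
    """Number of hits within radius of a given screen position."""
    return sum(1 for x in hits if abs(x - centre) < radius)


if __name__ == "__main__":
    rng = random.Random(2024)  # fixed seed only to make the demo repeatable
    hits = sample_hits(5000, rng)
    print("first three hits:", [round(x, 2) for x in hits[:3]])
    print("near bright fringe (x=0):  ", count_near(hits, 0.0))
    print("near dark fringe   (x=0.5):", count_near(hits, 0.5))
```

Nothing in the code can say where the next hit will land, yet the bright-to-dark ratio after thousands of hits is entirely predictable.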

In QM any state of a system is described through a so-called density matrix, but unlike in classical mechanics this matrix defines the parameters of the system's future state only up to a certain probability. The most important philosophical conclusion from QM is the fundamental uncertainty of the results of measurement, and therefore the impossibility of exact predictions of the future. In other words, it gave certain people the means to finally exorcize Laplace's demon, who was fucking with their entire philosophy.

This, combined with Heisenberg’s uncertainty principle and some other mind-blowing theoretical and experimental findings, led some scientists (the ones who didn’t give up on physics and move to the mountains to raise sheep) to suggest that microparticles don’t have any internal properties at all, and that these properties only show up during measurement. Those who suffered the most PTSD even suggested that the experimenter's consciousness is the key to the existence of the entire universe, because according to quantum theory it is the observation that creates or partially creates the observed.

Other nerds, unwilling to accept that everything works but nobody knows how, toiled at so-called “hidden-variable theories”. The idea behind them is the opposite of the previous assumption: let’s assume that the probabilistic nature of predictions is caused by some internal properties of particles that we don’t know about, and if we did, we could at least explain why this is happening. The thing is, quantum mechanics in its purest form is a study of the electron’s movement, and that’s it. It can’t be used to judge any internal properties of observed particles. Hundreds of scientists across the world are still trying to solve the problem of electron density. Hence the question, “how is it really working on the inside?”. The simplest of these ideas were annihilated by the Bell inequality (see below).

Quantum nonlocality

The phenomenon of quantum nonlocality means that the states of entangled subsystems stay correlated no matter how far they are from each other. It would therefore seem possible to instantaneously identify a quantum state in one location, at any distance, by measuring the state entangled with it at another location, and as a result to pass information at infinite speed – quantum teleportation.

Even though entangled quanta correlate instantaneously, it is still impossible to transfer information this magical way (sci-fi fans cry bloody tears). Measuring the quantum state and correlating it to the entangled quantum still requires time and common communication devices. Nevertheless, the mere fact that two particles, no matter how far they are removed from each other, can still, albeit in a specific way, instantaneously affect each other, made certain scientists shit train cars of bricks.

In human language, it is the same as if we launched the same random number generator on two computers at the same time, only with the results of the second one multiplied by -1. So, no matter where we take the second computer, we can use it to easily restore the random numbers from the first computer. But since the numbers are random, we can’t use it to pass intelligible information in any direction.
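The two-computer analogy can be run literally. This is a sketch of the analogy only: two generators sharing a seed are exactly the kind of pre-agreed "hidden variable" that real entanglement is later shown not to be, so treat it as an illustration of "correlated but useless for messaging", not as a model of quantum mechanics. The seed and ranges are arbitrary.

```python
import random


def make_pair(seed):
    """Two identical generators, started 'at the same time' with the same seed."""
    return random.Random(seed), random.Random(seed)


def draw(rng_a, rng_b, n):
    """Computer A prints its numbers; computer B prints its own times -1."""
    a = [rng_a.randint(-9, 9) for _ in range(n)]
    b = [-rng_b.randint(-9, 9) for _ in range(n)]
    return a, b


if __name__ == "__main__":
    rng_a, rng_b = make_pair(seed=12345)
    a, b = draw(rng_a, rng_b, 8)
    print("computer A:", a)
    print("computer B:", b)
    # B's owner can reconstruct A's numbers, but neither side chose them.
    print("restored A:", [-x for x in b])
```

Whoever holds the second computer learns the first one's numbers instantly, and still has received no message, because nobody got to pick the numbers.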

Even Einstein’s twisted brain couldn’t accept that, and a holy war, notorious in scientific circles, began.

The solution was found in the no-cloning theorem.

Quantum holy war

Einstein vs quantum mechanics

So, Einstein didn't get to be proud of blowing everyone’s mind with his relativity for too long. In his megatheory he buried Newton’s classical mechanics on macro scale, replacing it with something a bit more precise, and could’ve easily spent the rest of his life calling everyone around him cunts, but other nerds with equal zeal already began creating quantum mechanics, which in turn made Einstein exclaim: “whatta fuck?”

When QM was born even its creator Max Planck never really accepted the entire quirkiness of this science. Einstein simply considered subj an absurd theory, called it “madness”. The crisis of perception was so deep, because with the invention of QM physicists completely lost ground under their feet in the form of visual schematics and intelligible interpretations. More and more physics became math, i.e. abstract equations, that sometimes weren’t even verifiable experimentally, and occasionally produced absurd results.

It started one of the bloodiest holy wars in the history of physics, during which eminent scientists trolled each other with great virtuosity, and those who didn’t understand anything about QM simply stockpiled popcorn.

In the corner of Einstein and Truth viciously fought physicists like Planck and Schrodinger, and the arch villain on the side of QM was Niels Bohr, with the help of Heisenberg, Born, Jordan, Landau and other scientists with enhanced consciousness. An especially prominent figure among the trolls was Dirac, spawned by antimatter, who used his highly anisotropic intracranial ganglion to construct wild equations in quantum fields of negative relativistic energies in order to introduce the term “antimatter” into the everyday life of the already amicable physics community.

The essence of the conflict was the answer to the question: is the world really driven by fundamental uncertainty, or do we simply not know some parameters of microparticles which, if measured, could predict the behavior of quanta 100%?

EPR paradox

When Einstein realized that his beams of hate are successfully annihilated by Bohr’s contrary beams, he came up with (as he thought at the moment) final solution to the quantum question.

In 1935 Einstein together with Boris Podolsky and Nathan Rosen published an article called "Can Quantum-Mechanical Description of Physical Reality be Considered Complete?", in which they proposed a thought experiment that was later named Einstein–Podolsky–Rosen paradox (EPR paradox).

According to Heisenberg’s uncertainty principle it is impossible to measure both the location of a particle and its momentum exactly at the same time. Assuming that the reason for the uncertainty is the fundamentally unavoidable disturbance of the particle’s movement during the measurement of one parameter, leading to unpredictable distortion of the other, it is possible to suggest a hypothetical method that bypasses the fundamental limit on the accuracy of measurement.

If two particles were born from another particle’s decay, then their momenta will be correlated. That provides the opportunity to measure the momentum of one particle and, using the momentum conservation law, calculate the momentum of the second particle without distorting its movement. Therefore, if we then measure the position of the second particle, we will have the values of two quantities that can't be measured simultaneously, which is impossible according to QM. This leads to the conclusion that the uncertainty principle isn’t absolute, that the laws of QM are incomplete and must be refined in the future.

Bohr’s response followed shortly: in this case we must count both particles not as independent, but as a single quantum system. Therefore measuring the speed of one particle, we interfere with the other and the wave function collapses. Einstein called it "spooky action at a distance".

In the end holy war transitioned into a state where everybody reserved their own opinion, but didn’t have any solid arguments. Only an experiment could show who would suck whose what, but to everybody’s benefit nobody knew how to conduct such an experiment.

Bell inequality test

In 1951 physicist Bohm came up with a plan for an experiment that could finally help scientists settle the issue. In 1964 another physicist, Bell, while playing with math came up with an inequality named after himself, which made it possible to formalize the problem and finally decide who's the biatch. As is common in this type of situation, not many understood this test, and even if they did, they couldn’t explain it to others. Still, thanks to this artifact it became possible to experimentally obtain a certain value that shows the correlation between remote measurements, and based on that establish whether it makes sense to describe quantum events as probabilistic or deterministic, thus ending the quantum holy war.

The main idea behind the experiment was this: in QM a system of entangled particles is described in such a way that, contrary to the second postulate of special relativity regarding the maximum speed of interaction, the particles keep an instantaneous interconnection through time and space. The Bell inequality test made it possible to establish whether this instantaneous "action at a distance" really takes place, or whether the system can be described via the principle of locality, meaning that after flying apart the particles can only affect each other with a delay. The beauty of the Bell inequality test is that it mathematically rules out the entire class of theories based on local hidden variables – if the experiment confirms the correlations predicted by QM.
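The "certain value" can be sketched with the CHSH form of the inequality, a later and more experiment-friendly variant rather than Bell's original 1964 expression. Any local scheme with pre-assigned ±1 answers keeps the combination S at or below 2, while the quantum prediction for a spin singlet, E(a, b) = −cos(a − b), reaches 2√2 at suitably chosen angles. Function names and the angle choice are illustrative.

```python
import itertools
import math


def quantum_correlation(angle_a, angle_b):
    """QM prediction for a spin singlet measured along two directions."""
    return -math.cos(angle_a - angle_b)


def chsh(corr, a, a2, b, b2):
    """CHSH combination S = |E(a,b) - E(a,b') + E(a',b) + E(a',b')|."""
    return abs(corr(a, b) - corr(a, b2) + corr(a2, b) + corr(a2, b2))


def max_local_chsh():
    """Brute-force every deterministic local assignment of +/-1 outcomes.

    Randomised local strategies are mixtures of these, so they cannot
    exceed the same maximum.
    """
    best = 0
    for out_a, out_a2, out_b, out_b2 in itertools.product((-1, 1), repeat=4):
        s = abs(out_a * out_b - out_a * out_b2
                + out_a2 * out_b + out_a2 * out_b2)
        best = max(best, s)
    return best


if __name__ == "__main__":
    s_quantum = chsh(quantum_correlation,
                     0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
    print("local hidden variables, best case:", max_local_chsh())
    print(f"quantum mechanics: {s_quantum:.4f}")
```

Local bookkeeping tops out at 2, quantum mechanics predicts about 2.83, and the experiment gets to pick the winner.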

Happiness was so close, but experiments conducted in 1972 at the University of California documented a violation of the Bell inequality and therefore proved that QM was correct. As always, everybody cheerfully acknowledged the results, but didn’t agree on what they really meant. The holy war continued.

Quantum entanglement

So, the Bell inequality was experimentally violated, and the EPR paradox, instead of exposing QM, explicitly confirmed its correctness. Heisenberg’s uncertainty principle is unbreakable, ergo in the conditions of the EPR paradox interfering with the momentum and position of one particle inevitably affects the other one, however far away it is. The postulates of relativity, along with all classical concepts, were put in serious peril, which many chose to ignore. QM, it seems, once and for all became a non-local theory.

A condition when a change of state of one subsystem affects the other is called quantum entanglement.

In the condition of quantum entanglement, the interacting subsystems are described as a single superposition, which isn’t localized in a certain place in space. The process in which, as a result of interaction with the surroundings, a non-local superposition transitions into a local classical state is called decoherence; the reverse process is called recoherence. This phenomenon is the basis for quantum computers, whose working state space, unlike that of regular computers, grows exponentially with the number of qubits.

The gist. Imagine that Alice and Bob got themselves a couple of devices. Each device has one button, one press counter and one display, showing either 0 or 1.

Alice pressed the button five times and sequentially received:

1 0 0 1 1

Bob pressed the button five times on his device and sequentially received:

1 0 0 1 1

So the values on display are random, but the second device repeats them precisely. Randomness and correlation of results are verified statistically. It raises the question, how does it work? Maybe information about values is stored inside the devices. Maybe, the first device generates a random value and sends it to the second. In case of QM-entanglement, both answers are wrong. The fact that the information isn’t stored and there are no “hidden variables” is verified through Bell inequality. Signal transfer is also impossible, because entanglement passes instantaneously (Alice and Bob can travel to different cities, then simultaneously press the button on their devices and get the same result), i.e. faster than the speed of light.

Unfortunately, this device can’t be used to transfer information faster than the speed of light. Alice flies on a rocket and wants to inform Bob (exactly 24 hours after the launch) that everything is ok (“1” – ok, “0” – problem). 24 hours later Bob presses a button and sees 1 0 0 and so on. How can he tell from these values that Alice is ok? He can’t. The values are random. The fact that his device shows 1 0 0 doesn’t correspond in any way to Alice’s situation. So, it’s impossible to pass information faster than the speed of light. And thanks for that, otherwise it would be possible to send information into the past and violate causality (according to special relativity, not QM).

But this device allows creating an ideal channel for passing secret information. Alice and Bob want to meet, but Bob’s jealous husband intercepts their communications via trojaned Skype. Suspecting this, Alice and Bob agree beforehand to press the button 8 times, read the result as a binary number and add it to the real motel room number before saying it out loud (if the result is 00000000, they press 8 more times). Alice calls Bob. They press the buttons and get 10011011, aka 155. Alice says: “Let’s meet in the motel “Wet dreams” in room 178.” As a result, the infuriated husband breaks into room 178, while Alice and Bob discuss quantum mechanics in room 23 (155+23=178). The husband can’t find the correct room number without seeing the values on the device. And he won’t find anything if he examines the device before the conversation, since the values are random. Why is this method better than two-way asymmetric encryption, which also allows for an open channel and a known encryption algorithm? Because it is a bona fide Vernam cipher with an endless supply of one-time-pad keys. And this is the only encryption method mathematically proven to be unbreakable.
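The motel arithmetic, plus the bit-level version of the same trick, can be sketched as follows. The helper names are invented for this example, and the key in the second half is a hard-coded stand-in for what the entangled devices would spit out. Strictly speaking, the textbook Vernam cipher XORs key bits with message bits rather than adding integers, so `xor_pad` is the closer match to the cipher named above.

```python
def bits_to_int(bits):
    """Read a string of 0s and 1s from the device as a binary number."""
    return int(bits, 2)


def announce_room(real_room, shared_bits):
    """What Alice says on the tapped line."""
    return real_room + bits_to_int(shared_bits)


def recover_room(announced_room, shared_bits):
    """What Bob computes from the announcement and his own device."""
    return announced_room - bits_to_int(shared_bits)


def xor_pad(message: bytes, key: bytes) -> bytes:
    """One-time pad: XOR each message byte with a fresh key byte.

    Applying it twice with the same key returns the original message.
    """
    if len(key) < len(message):
        raise ValueError("one-time pad key must be at least as long as the message")
    return bytes(m ^ k for m, k in zip(message, key))


if __name__ == "__main__":
    shared = "10011011"  # both devices showed the same 8 presses
    said = announce_room(23, shared)
    print("said on the phone:", said)
    print("Bob goes to room: ", recover_room(said, shared))

    key = bytes([0x9B, 0x3C, 0x51, 0xE7, 0x08, 0xAA, 0x4D])  # stand-in for device output
    secret = xor_pad(b"room 23", key)
    print("ciphertext:", secret.hex(), "->", xor_pad(secret, key))
```

The key must be as long as the message and never reused, which is exactly why a device that hands out unlimited shared random bits is so convenient.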

The Quantization of Gravity

After playing with QM for a while, physicists said: “This is all really nice, but what are we going to do with the electromagnetic field?” And in order to seal the victory decided to quantize the electromagnetic interaction. Clarification: to “quantize” means to describe an interaction as an exchange of some hypothetical particles – force mediators. In quantum field theory these particles are called “virtual”, because they are born and die so fast that they can’t be detected, even though they are real. Fourier series expansion, renormalization and few other unholy rites quantized electromagnetic field without breaking a sweat. The theory also successfully described the weak interaction.

But when scientists got to gravity – all the crazy math they made up to quantize fields proved to be completely useless, infinities and absurd probabilities were oozing out of every orifice. The problem is this: in general relativity gravity is described as the curvature of spacetime, not a field like the electromagnetic force. First problem is the absence of a “sign” of the “charge” of the gravitational field, i.e. mass; the second problem – to quantize gravity means to quantize space itself, assuming its discreteness. Clarification: discreteness of a certain value means existence of indivisible minimum, or, for space, of minimum possible length of measured interval. Actually, in quantum gravity theories this limit exists and is called Planck length. But special relativity claims that as an observer's speed gets closer to the speed of light the length of any segment decreases, in the limiting case compressing into a dot (at the speed of light). And the same obscenity happens to Planck length, rendering the idea pointless.
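Both numbers in this clash are easy to compute. A small sketch, assuming the usual definition of the Planck length, sqrt(ħG/c³), and the standard Lorentz contraction factor sqrt(1 − v²/c²); the constants are standard values and the function names are illustrative.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J*s
G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8        # speed of light, m/s


def planck_length():
    """The supposed minimum length, in metres."""
    return math.sqrt(HBAR * G / C**3)


def contracted_length(rest_length, beta):
    """Length seen by an observer moving at speed beta = v/c."""
    return rest_length * math.sqrt(1.0 - beta**2)


if __name__ == "__main__":
    lp = planck_length()
    print(f"Planck length: {lp:.3e} m")
    for beta in (0.6, 0.99, 0.999999):
        print(f"v = {beta} c: {contracted_length(lp, beta):.3e} m")
```

A fast enough observer measures the "indivisible minimum" as something shorter than itself, which is the obscenity in question.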

This tragic mishap has completely gridlocked fundamental physics to this day. Both theories have been experimentally proven right, but the Holy Grail of physicists, the “unified field theory”, is yet to be discovered. That’s where all the GUTs and string theories come from. Physicists continue inventing tons of new gory math, but there’s a problem with experimental data: to test these new theories they require such wunderwaffles that even very kind sugar daddies with billions of moneys will think twice before spending a fortune on an experiment that won’t necessarily give any results. And even if it does, there’s no guarantee that these results will have any real value, except academic. Also, the construction of such mega-devices faces some practical challenges: the required energies exceed the capacity of current humankind, and so physicists have to make do with observing indirect effects in outer space, where, thankfully, such energies sometimes occur.

Interpretations of quantum mechanics

Correlations have physical reality; that which they correlate does not.

David Mermin in scientific language

The truth is there is no spoon.

Translation to common language

Interpretations of quantum mechanics are an attempt to answer the question: “What does quantum mechanics actually tell us about the world?” QM is considered the “most tested and most successful theory in the history of science”, but the main question – what is its deeper meaning – is still open.

Not being able to agree on what is actually happening in the microworld, scientists offered over 9000 versions of the Deeper Meaning of the theory. In other words, if you show the same equations to two physicists, they will nod in agreement, but if you ask them to explain the equations with their mouths, they will most likely kick each other’s teeth out.

- Copenhagen interpretation – the most popular interpretation in modern QM. States that in QM the result of a measurement is fundamentally indeterministic, and the probabilistic nature of predictions is unavoidable. The Copenhagen interpretation doesn’t bother to ask “where was the particle before I registered its location”. Its followers believe that it’s the process of measuring that randomly chooses strictly one possibility out of all those allowed by the wave function of a given state, and the wave function itself instantaneously changes to reflect this choice.

- Many-worlds interpretation, aka Everett interpretation – the interpretation of QM that assumes the existence of “parallel universes”: the same laws of nature and the same constants apply to all of them, but they exist in different states. Whenever a quantum experiment is conducted, the universe splits into as many universes as there are possible outcomes of the experiment, and each outcome is 100% achieved in one of them, while the observer, who happens to be in only one of them at a time, witnesses only his one specific outcome. In the case of the “two-slit” experiment this happens: as a photon approaches the slits the universe splits, and the photon goes through the slit that the observer is watching (actually it goes through the other one as well, but there’s no one there to witness it). According to QM opponents, this interpretation is the most sci-fi of them all (actually they consider the entire QM sci-fi). Nevertheless, many prominent scientists acknowledge its right to exist.

- Hidden-variable theories try to explain the results of experiments by the incompleteness of our knowledge of the microworld. Although fairly logical in their core ideas, they are not confirmed experimentally: tests of Bell's inequality have ruled out a wide set of hidden-variable theories (basically all explicitly local ones). Unfortunately, the other, explicitly nonlocal theories, even though they don’t contradict the experiments, fell victim to physical profiling.

- Transactional interpretation states that the particle sends a request into the future (retarded wave) and receives a response (advanced wave). The degree of phase coherence determines the amplitude, and the square of the amplitude determines the probability of an event. This approach solves the problem of the experimenter (Schrödinger’s cat and Einstein's mouse). Free will goes out the window.

- Superdeterminism tries to explain the results of experiments by the absolute determinism of everything at all. Just like with the transactional interpretation, free will goes out the window, but there’s no need to send any requests to the future, because all events in the universe have already been predetermined. Therefore, the device that breeds quantum-entangled pairs of particles for yet another experimental physicist knows beforehand which experiments he is going to subject them to, and adjusts their properties in such a way that the result of the experiment doesn’t contradict QM. Scientists don’t particularly like this interpretation: first, it means that the Universe is basically trolling them; second, full determinism makes the entire science pointless, because nothing really depends on the scientists. Nothing depends on anything at all actually, even the sky, even Allah! Just total fatalism and melancholy.

- Also some physicists choose the “silent” interpretation of QM comprehensively expressed in David Mermin’s aphorism "shut up and calculate!".
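Whatever the interpretation, the "calculate" part looks the same: the squared magnitude of an amplitude gives the probability of an outcome, and a measurement picks exactly one outcome. Below is a minimal sketch of that rule for a toy state given as a list of complex amplitudes; the function names `born_probabilities` and `measure` are invented for this illustration, and the "collapse" step follows the Copenhagen reading described in the list.

```python
import random


def born_probabilities(amplitudes):
    """Return outcome probabilities |a_i|^2, normalised to sum to 1."""
    weights = [abs(a) ** 2 for a in amplitudes]
    total = sum(weights)
    if total == 0:
        raise ValueError("state has zero norm")
    return [w / total for w in weights]


def measure(amplitudes, rng=random):
    """Randomly pick one outcome and return (index, collapsed state)."""
    probabilities = born_probabilities(amplitudes)
    index = rng.choices(range(len(amplitudes)), weights=probabilities)[0]
    # After the measurement only the chosen outcome remains in the state.
    collapsed = [1.0 if i == index else 0.0 for i in range(len(amplitudes))]
    return index, collapsed


# Toy two-outcome state: equal-weight superposition with a relative phase.
state = [1 / 2 ** 0.5, 1j / 2 ** 0.5]
print(born_probabilities(state))
print(measure(state, random.Random(0)))
```

The phase of an amplitude does not change the probabilities of a single measurement here; only the magnitudes matter.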

Some, however, didn't stop here and stated that there is no universe at all, everything is just a figment of the noble sir's imagination. This elegant solution easily explains the gibberish achieved in calculations and experiments.
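For those who still believe in the universe, the Bell inequality tests mentioned among the hidden-variable theories can be sketched in a few lines. The example below uses the CHSH form of the inequality, assuming the textbook quantum prediction E(a, b) = -cos(a - b) for a pair of spins in the singlet state and, for comparison, one simple local hidden-variable model with a sawtooth correlation. All function names are invented for this illustration.

```python
import math


def singlet_correlation(a: float, b: float) -> float:
    """QM prediction for a singlet pair measured at analyser angles a, b (radians)."""
    return -math.cos(a - b)


def local_model_correlation(a: float, b: float) -> float:
    """Correlation of a simple local model: each particle carries a shared
    hidden direction and answers with the sign of its projection on the analyser."""
    theta = abs(a - b) % (2 * math.pi)
    if theta > math.pi:
        theta = 2 * math.pi - theta
    return -(1 - 2 * theta / math.pi)


def chsh(correlation, a, a_prime, b, b_prime) -> float:
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return (correlation(a, b) - correlation(a, b_prime)
            + correlation(a_prime, b) + correlation(a_prime, b_prime))


# Analyser angles that maximise the quantum violation.
angles = (0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
print("quantum |S| =", abs(chsh(singlet_correlation, *angles)))
print("local   |S| =", abs(chsh(local_model_correlation, *angles)))
```

Any local hidden-variable theory must give |S| no greater than 2, while the quantum prediction reaches 2√2, which is what the experiments see.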

Astral

When astralopithecuses learned that there’s something about this world that even scientists can’t explain, they started intensely consuming the subj, but, as is often the case, mostly consumed tons of weed, assimilating the terms while ignoring the content. As a result:

- The paradox of the observer, which works only for electrons, SUDDENLY started applying to the real world, and allows those who know the Secret to grab the universe by its tits.

- The probabilistic nature of particles became proof that everything is possible. The many-worlds interpretation of Schrödinger’s equation means that parallel universes actually exist and any second now Cthulhu is going to climb out of them.

- Quantum entanglement, according to these nirvana-dwellers, means the possibility of teleportation, even though physics states that teleportation is entirely impossible.

Therefore every aspiring Guru feels the need to proclaim that quantum mechanics is scientifically proven evidence that everything he teaches his padawans is true. There’s no escape, and even a prayer said on a 10th grade physics textbook doesn’t always help.

Also, advanced influencers noticed that the word “quantum” affects brains even better than the prefix “nano”, and makes any article sound more scientific and important.

Current state

It’s very hard to talk quantum using a language originally designed to tell other monkeys where the ripe fruit is.

Lu Tze

At the moment QM is considered the most verified (and most paradoxical) scientific theory that ever existed. Despite decades of brainstorming, nobody understands how it works. At the same time, the main proof that it does work is the fact that you are reading this article right now, since QM became the theoretical basis for semiconductor electronics.

The main problem of modern physics is uniting QM with the theory of relativity to produce the theory of everything, because at the moment they are about as agreeable as Bohr and Einstein.

There are a few possible candidates for the title of the final theory, like loop quantum gravity and, of course, string theory. But the story is still far from over and the answer to the Ultimate Question of Life, the Universe, and Everything remains unknown.